Twitter has told Clearview AI, the maker of a law enforcement app, to stop downloading images from its platform to build its facial recognition databases, saying the practice violates its policies.

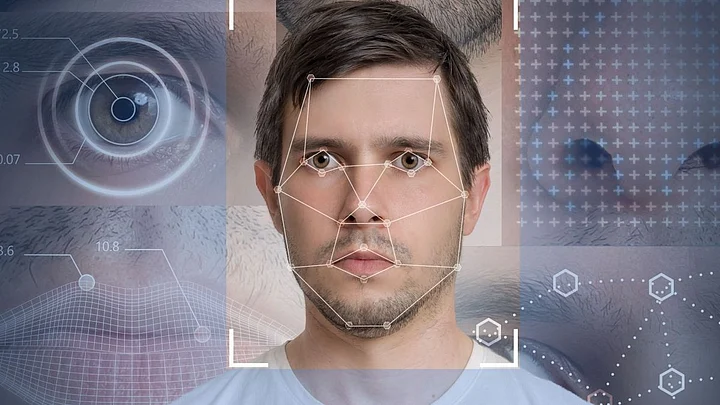

The controversial app uses over three billion images to find a match. These images have been sourced from various social media sites including Facebook, YouTube and Twitter.

According to The New York Times, Twitter has sent a letter to the startup, saying it must stop collecting photos and other data from its platform "for any reason" and delete any photo that it previously collected.

The cease-and-desist letter accused Clearview AI of violating Twitter's policies.

The facial recognition app has made the news recently for its unscrupulous way of collecting photos of people and hosting them on its servers, where they are used by law enforcement agencies like the Federal Bureau of Investigation (FBI) and the Department of Homeland Security, among others.

These agencies have already claimed the Clearview AI app has helped them identify suspects involved in criminal cases.

What’s concerning is that Clearview AI, based in New York, is not available to the general public, and according to reports, its website is of no use when people like you and me try to search for ordinary people.

“Clearview searches the open web. Clearview does not and cannot search any private or protected info, including in your private social media accounts,” says the information on its website.

But more than common people, women across the world have become concerned about the nature of the app and how it provides information with ease to anybody.

"As the news of this app spread, women everywhere sighed. Once again, women's safety both online and in real life has come second place to the desire of tech startups to create, and monetize, ever more invasive technology," Jo O'Reilly, a privacy advocate with Britain-based non-profit ProPrivacy, told Digital Trends.

Privacy, What’s That!

The fact that you have a platform, running on the internet, which can pick any photos it wishes to, is a clear violation of user privacy.

Yes, it might be helping law enforcement agencies, but does that mean Clearview AI will never work against common people? Given how we’ve seen the likes of Facebook being used, it would be hard to trust any of the technology companies involved in such practices.

Indian police departments are taking the use of facial recognition software to a new level, using it in different ways to monitor peaceful protests against the Citizenship Amendment Act (CAA) and the National Register of Citizens (NRC). Departments like the Delhi Police and the Hyderabad Police are stopping to take photos and videos of protesters, and the Delhi and Uttar Pradesh Police are even using drones.

The police do not have any authority to conduct mass surveillance of the general public, especially with no law on surveillance in place, as ordered by the Supreme Court in the Right to Privacy judgment, Puttaswamy vs Union of India (2017). In fact, the right to privacy and the right to peaceful assembly are both fundamental rights enshrined in the Indian Constitution.

(With IANS inputs)
