Following the launch of "Duplex", an artificial intelligence (AI) system that can mimic a human voice to make appointments and book tables, among other functions, tech critics raised a widespread outcry over the ethical dilemmas it poses.

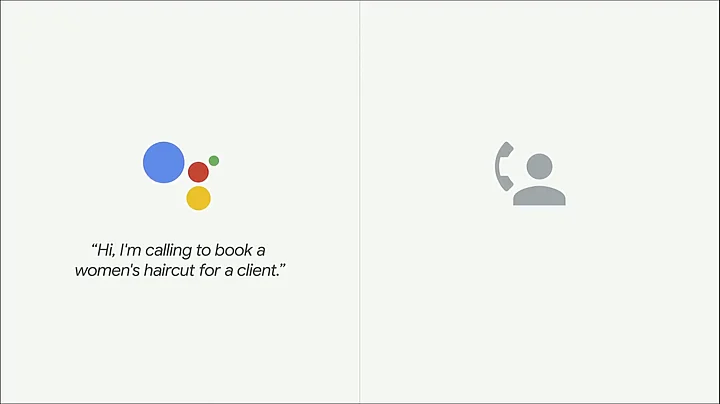

Google CEO Sundar Pichai introduced Duplex earlier this week at Google I/O, the company's annual developer conference, and demonstrated how the AI system could book an appointment at a salon and a table at a restaurant. In the demo, the Google Assistant sounded like a human.

A Google blog post later revealed that the demo had used DeepMind's new WaveNet audio-generation technique, along with other advances in natural language processing (NLP), to replicate human speech patterns.

Google told The Verge that the experimental system would have "disclosure built-in", meaning that whenever Duplex holds a voice conversation with a human on the other end of the line, it would identify itself as an AI.

> "We understand and value the discussion around Google Duplex, as we have said from the beginning, transparency in the technology is important. We are designing this feature with disclosure built-in, and we will make sure the system is appropriately identified. What we showed at I/O was an early technology demo, and we look forward to incorporating feedback as we develop this into a product."
>
> Google spokesperson, to The Verge

Google's response comes after tech critics raised questions about the morality of the technology, saying it had been developed without proper oversight or regulation.

In the blog post, Google had originally said that "it's important to us that users and businesses have a good experience with this service and transparency is a key part of that".

But nobody was falling for that, especially given how craftily the Assistant managed to complete the booking without the person on the other end becoming suspicious.

(At The Quint, we question everything. Play an active role in shaping our journalism by becoming a member today.)