If you post “someone should kill Trump”, Facebook will take your comment down. But if you say “let’s hit fat children”, that will not be a violation of the social media website’s guidelines.

This is just one of the findings from more than 100 training manuals, spreadsheets and flowcharts obtained by The Guardian, which together serve as the blueprint for how Facebook deals with racism, violence, hate speech, pornography and self-harm.

These “Facebook Files” lay out rules drawn up by the company, which faces immense pressure from the US and Europe to regulate itself given its wide reach.

Facebook’s moderators are extremely pressed for time given the sheer volume of comments they must review; they sometimes have only 10 seconds to make a decision.

“Facebook cannot keep control of its content... It has grown too big, too quickly,” said one source.

Moderators themselves find the guidelines confusing, since objectionable content always involves a grey area. The rules on sexual content are the most confusing of all.

One of the documents reviewed stated that every week, nearly 6.5 million potentially fake accounts are reported to the website.

The guidelines, which run to thousands of slides, may worry critics who argue that the service is now a publisher and must do more to find and remove hurtful, hateful and violent content.

But they may also concern free speech advocates, who are worried by Facebook’s role as a de facto censor.

Both groups are likely to demand greater transparency.

Evaluation of Comments

Violent content is evaluated on the basis of credibility, that is, how plausible the threat is. Facebook acknowledges that “people use violent language to express frustration online” and feel “safe to do so” on the site.

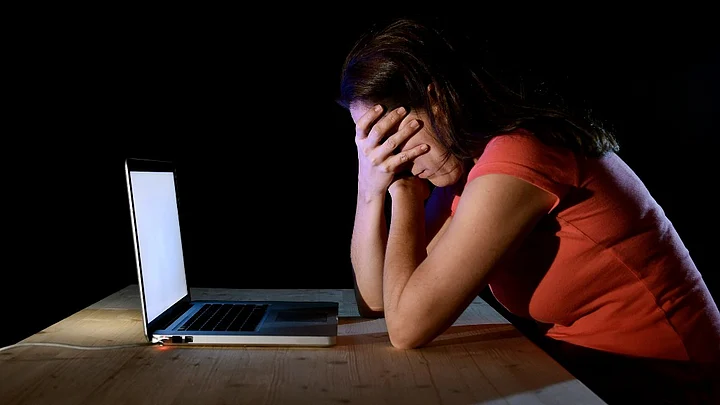

“They feel that the issue won’t come back to them and they feel indifferent towards the person they are making the threats about because of the lack of empathy created by communication via devices as opposed to face to face,” according to Facebook.

Not all violent and disagreeable content violates Facebook’s policies.

In a statement to The Quint, Facebook’s Head of Global Policy Management Monika Bickert said:

Keeping people on Facebook safe is the most important thing we do. We work hard to make Facebook as safe as possible while enabling free speech [...] Mark Zuckerberg recently announced that over the next year, we’ll be adding 3,000 people to our community operations team around the world – on top of the 4,500 we have today – to review the millions of reports we get every week and improve the process for doing it quickly.

Bickert said that some offensive comments violate Facebook’s policies in some contexts but not in others. These are the contexts in which they do.

To Censor or Not to Censor

While opinion is divided on what counts as graphic, Facebook allows users to live-stream self-harm and suicide attempts. Such broadcasts are seen as a cry for help and a starting point for a conversation about mental health.

Recently, Mumbai student Arjun Bharadwaj live-streamed his death on Facebook, and authorities were notified only after his friends saw the video. In the US, when police fatally shot Philando Castile, an unarmed black man, his girlfriend live-streamed the incident as proof of growing police brutality in the country.

Facebook deleted that video but later reinstated it, saying the removal had been a mistake.

Facebook’s struggle with censorship isn’t new. The company removed the “Napalm girl” photograph from the Vietnam War because nudity is against its policy, and later reinstated it. Poet Rupi Kaur’s images of a menstruating woman were likewise deleted as “graphic” content before being reinstated.

As Facebook’s reach grows, so does its moral and social responsibility to moderate content and to keep broadening its understanding of what counts as graphic and harmful.
