Deepfake technology uses artificial intelligence (AI) to generate convincing images or videos of made-up or real people. It is surprisingly accessible and has been put to a range of uses, including entertainment, misinformation, harassment, propaganda and pornography.

Researchers Renée DiResta and Josh Goldstein of the Stanford Internet Observatory found that this technology is now being used to boost sales for companies on LinkedIn, according to an NPR report.

The researchers found more than a thousand LinkedIn profiles with AI-generated profile pictures. The technology most likely used is a generative adversarial network (GAN), which pits two neural networks against each other: one generates images while the other tries to tell them apart from real ones.

LinkedIn says it has since investigated and removed the profiles that violated its policies, including rules against creating fake profiles or falsifying information, the publication reported.

The investigation began when DiResta received a message from an account that appeared to be fake. In its profile picture, the eyes were aligned perfectly in the middle of the image, the background was vague, and one earring was missing.

Delhi-Based Firm Offers AI-Generated Profiles

One of the lead-generation companies that LinkedIn reportedly removed after the Stanford Internet Observatory's research was Delhi-based LIA.

According to LIA's website, from which all information has since been removed, the company offered hundreds of "ready-to-use" AI-generated avatars for $300 a month each, the report said.

“It’s not a story of mis or disinfo, but rather the intersection of a fairly mundane business use case with AI technology, and resulting questions of ethics and expectations,” DiResta wrote in a tweet.

"What are our assumptions when we encounter others on social networks? What actions cross [the] line to manipulation?" she added.

Recently, a deepfake video circulated on social media in which Ukrainian President Volodymyr Zelenskyy appeared to ask Ukrainian troops to lay down their arms.

That video was not very convincing, but deepfakes are getting better, and people appear to be struggling to identify them.

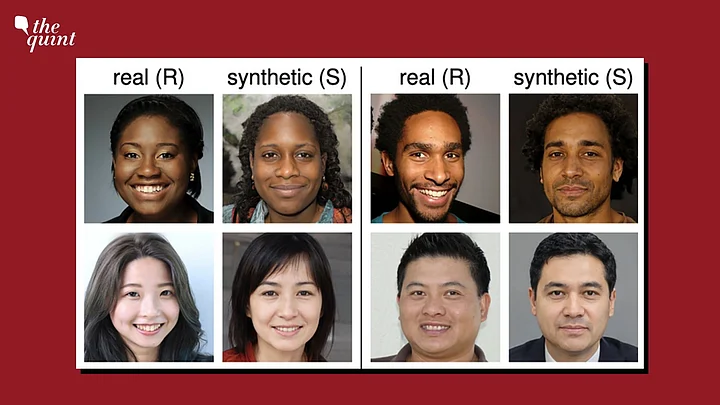

A recent study published in the Proceedings of the National Academy of Sciences found that people were no better than chance, at roughly 50 percent accuracy, at telling whether a face had been generated by artificial intelligence. AI-synthesised faces were found to be indistinguishable from real faces and, surprisingly, were rated as more trustworthy.

(With inputs from NPR)

(At The Quint, we question everything. Play an active role in shaping our journalism by becoming a member today.)