All Eyes On Rafah — Will Our Activism Also Be AI-Generated Now?

Is AI sanitising outrage, diluting the gravity of real-world atrocities with a stream of perfectly curated anger?

One grainy black-and-white photo shook the world in 1972. Nick Ut's photograph of the ‘Napalm Girl’ during the Vietnam War was not aesthetically pleasing. It was raw, a searing indictment of war's brutality. Fast forward to today, and social media activism often leans towards the other extreme: perfectly composed images, crafted with algorithms to be shared in a second and forgotten just as quickly.

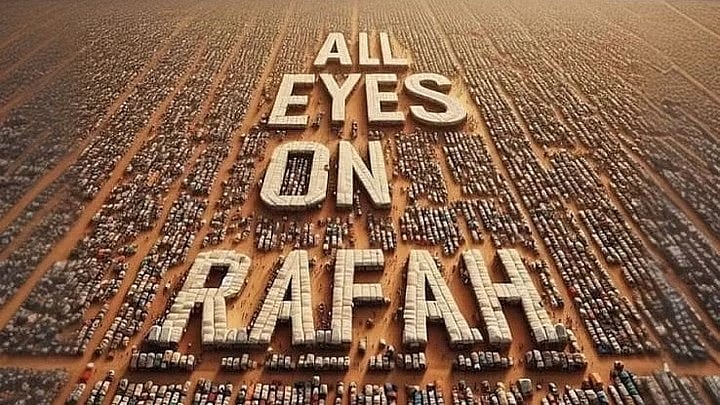

The violence in Palestine has been simmering for months now. The atrocities have been documented in images and videos that are surfacing from there. Yet, these visuals failed to spark the widespread social media outrage that an AI-generated image of ‘All Eyes on Rafah’ did, garnering nearly 47 million shares to date. What does this phenomenon tell us about the nature of online activism? Is it more about manicured aesthetics than sustained engagement with complex issues?

This is the unsettling reality of AI-generated activism, where algorithms churn out petitions, slogans, and social media content in the name of social justice. While it promises democratisation and virality of movements, a closer look reveals a potential pitfall: is AI sanitising outrage, diluting the gravity of real-world atrocities with a constant stream of perfectly curated anger?

The Visceral Impact of First-hand Suffering Cannot Be Replicated by Algorithms

The trajectory of many historical events has been altered by real human images that captured our collective attention and evoked profound emotional responses. The ‘Falling Man’ from the World Trade Center collapse or the searing images of overflowing cremation grounds during the second COVID-19 wave were not aesthetically pleasing pictures. They were raw, disturbing glimpses into human suffering, forcing us to confront uncomfortable realities.

These images lacked the calculated aesthetics of AI-generated content. Algorithms lack nuance. The ‘All Eyes on Rafah’ image exemplifies this perfectly. The image is overly simplistic and lacks context, highlighting the danger of AI activism: a sanitised representation of suffering that may evoke a mild sense of outrage without prompting deeper empathy or action. Sharing it gives us a sense of righteous participation. But what next?

The visceral impact of seeing suffering firsthand, hearing personal testimonies, and witnessing the consequences of inaction cannot be replicated by algorithms. The raw emotion captured in these images moved people to donate, volunteer, and advocate for better response mechanisms. This level of engagement and solidarity is difficult to achieve with AI-generated content designed for passive consumption.

The real images coming from Gaza are of parents identifying their son by matching his photo with a skull, of fathers giving chocolate to their dead children, and of people looking through the rubble to find the bodies of their loved ones. All these harrowing images are reflective of the magnitude of the horror that is happening in Gaza.

Social Media Sharing Often Devolves Into a Mindless Click-fest

AI-generated activism's propensity to create content tailored for maximum clicks and minimal discomfort can dilute the gravity of real-world atrocities. The sanitised, curated nature of AI-generated images and messages may fail to convey the full horror and urgency of certain situations. This can result in a desensitised audience accustomed to consuming bite-sized, aesthetically pleasing representations of suffering that do not evoke the necessary emotional response that drives real change.

While AI-generated content is easier to share and lends itself to virality, it is this very ease that becomes the problem in the long run. Sharing a powerful image or petition feels good. It is almost like a momentary act of solidarity that absolves us of the need for further engagement. This replaces real action with a performative click, creating the illusion of progress without the messy reality of getting our hands dirty.

While AI-generated content can be the first step, true change comes from individuals organising marches, donating funds, and engaging in difficult conversations about systemic issues. AI content may serve as a catalyst, but it is always human action and connection that fuel socio-cultural or political movements.

Furthermore, AI's limitations in comprehending complex issues pose a significant challenge. Social justice movements often grapple with historical context, cultural nuances, and the need for on-the-ground solutions. Algorithms struggle to grasp these complexities. An AI-generated message about a conflict in a developing nation might miss crucial cultural references or historical injustices that are central to understanding the situation. The Israel-Palestine conflict is rooted in decades of colonialism, racism, and religious issues. A shallow understanding of these issues carries the risk of oversimplifying them and undermining the effectiveness of activism.

The Fight for Social Justice Requires More Than Just Perfectly Curated Outrage

That being said, the potential benefits of AI activism should not be entirely disregarded. Algorithms can automate tasks like generating petitions or spreading awareness, freeing up human activists to focus on more nuanced and strategic work. However, it’s crucial to view AI as a tool and not as a replacement for human empathy and action. To put this into context, the ‘All Eyes on Rafah’ image could be used as a first step, but it has to be supplemented with a strong call to action: where to find more information, how to donate to relief efforts, how to mobilise, and so on.

Ultimately, the fight for social justice requires more than just perfectly curated outrage. It necessitates messy human connection, a willingness to engage with complexity, and a commitment to real-world action. AI might be a powerful tool in this fight, but it can never replace the raw emotions, empathy, and perseverance that spark genuine social change. Let us leverage technology while holding ourselves accountable for deeper engagement. In the fight for a better world, clicks alone won't be enough, but informed action combined with awareness can make a real difference.

(Farnaz is currently working as a Senior Copywriter in the advertising sector and is interested in exploring the intersections of gender, mental health, and popular culture through writing. This is an opinion piece and the views expressed are the author’s own. The Quint neither endorses nor is responsible for them.)
